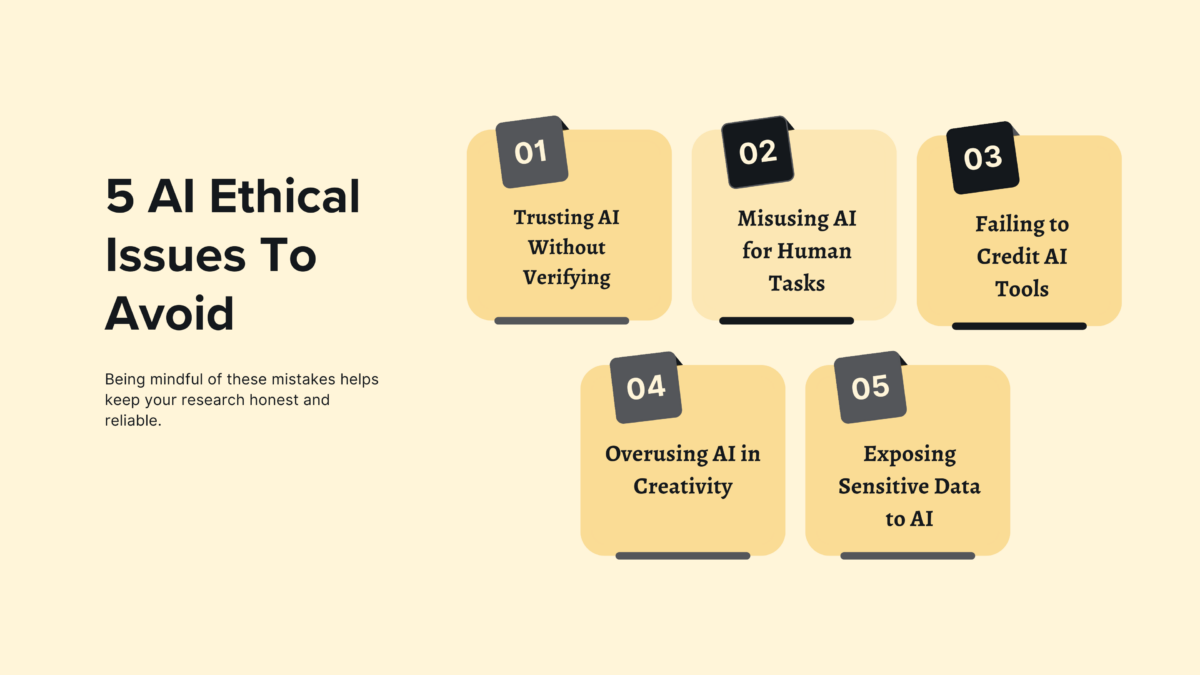

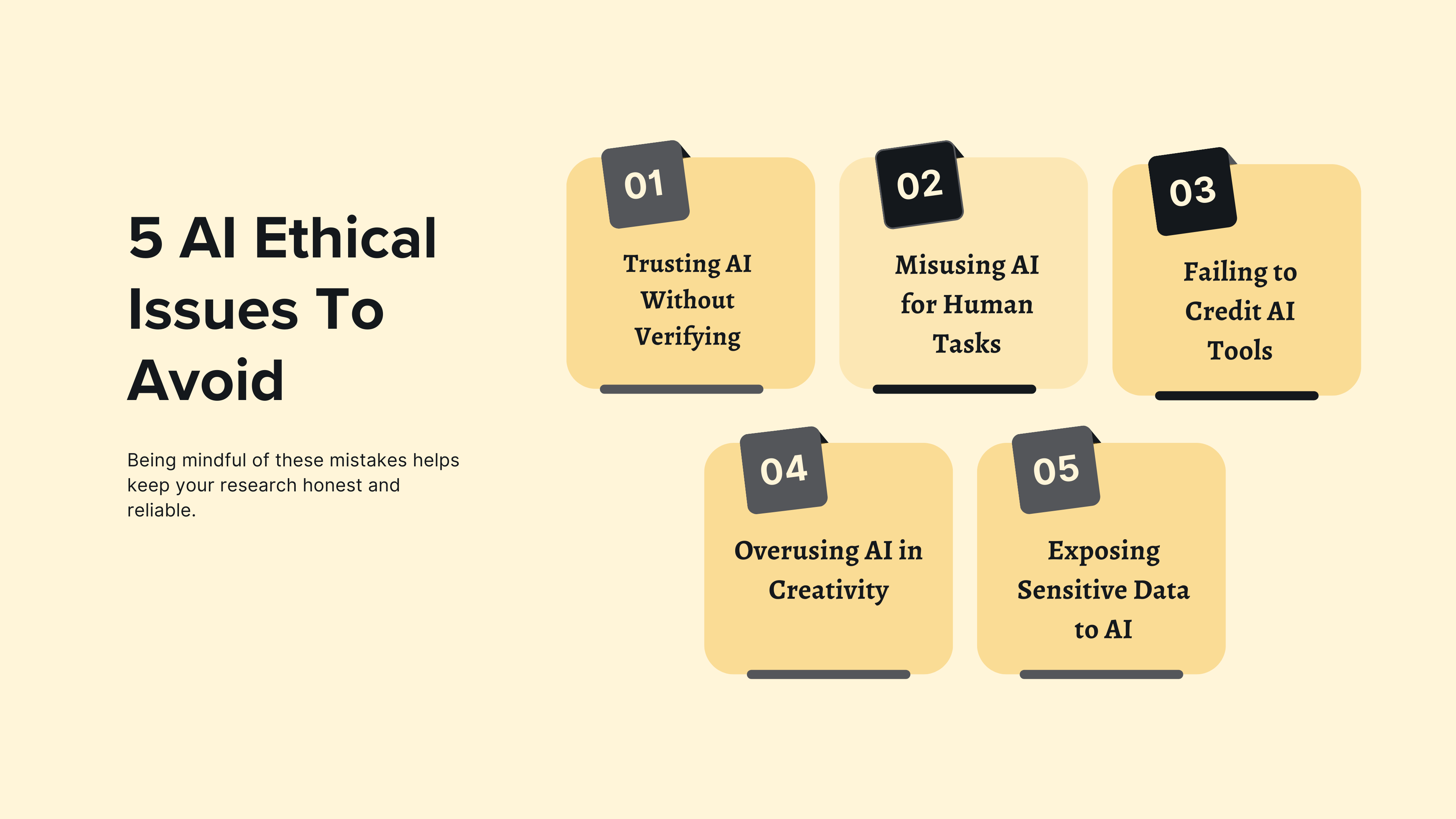

In a recent blog post, we discussed responsible AI in research and why it matters. Here, we turn to some AI ethical issues and what you should not be doing with AI in your research journey. We look at five common mistakes people make with AI in research, explain why they happen, and offer practical tips to avoid them.

1. Trusting AI Outputs Without Checking Them

One big AI ethical issue is trusting everything AI tools generate without taking the time to verify it. AI models like ChatGPT can produce convincing answers, but they’re not always accurate. In research, this can lead to spreading incorrect information or drawing the wrong conclusions.

Why It Happens: AI systems learn from existing data, which might include errors or biases. As a result, they can unintentionally repeat those issues.

What You Can Do: Treat AI-generated content as a helpful draft, not the final word. Always double-check the information with reliable sources.

2. Using AI for Tasks That Require Human Judgment

Relying on AI for decisions that need a human touch, like reviewing academic papers, is risky. These tasks often require context and empathy, which AI doesn’t have.

Why It Happens: AI seems efficient, so it is tempting to hand it these decisions. But it doesn’t understand the subtleties of human situations, which can lead to AI ethical issues in judgment and fairness.

What You Can Do: Let AI assist with organizing or summarizing information, but make sure a person is involved in decisions that affect others.

3. Not Giving Credit to AI Tools

Even when AI is used responsibly, failing to acknowledge its role can mislead readers about the originality of your work.

Why It Happens: People might not think of AI as a source that needs to be cited, overlooking important AI ethical issues related to transparency and attribution.

What You Can Do: Treat AI tools like any other resource. Check your institution’s or publisher’s guidelines for how to cite them properly.

4. Over-Reliance on AI for Creative Thinking

AI can handle repetitive tasks, but depending on it too much can stifle human creativity. Research often involves brainstorming new ideas, which AI can’t do as well as people.

Why It Happens: AI makes routine tasks more manageable, so letting it take over more complex ones is tempting.

What You Can Do: Use AI to free up time for critical thinking and creative problem-solving. Let it handle the busy work while you focus on the bigger picture.

5. Giving AI Access to Sensitive Data

Allowing AI tools to access personal information without proper permission can pose serious privacy and security risks.

Why It Happens: Some AI tools require access to data to function effectively, but their security measures might not be sufficient, leading to potential AI ethical issues.

What You Can Do: Limit the data AI tools can access. Use platforms with strong security features and comply with data protection regulations.
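If you work with text data, one way to limit what an AI tool sees is to strip obvious identifiers before you paste or upload anything. The Python sketch below is only an illustration of that idea: the `redact` function and its two patterns are hypothetical, they catch only simple e-mail addresses and phone-like numbers, and they are no substitute for a proper de-identification process or your institution’s data protection rules.

```python
import re

# Hypothetical patterns for illustration only. Real data usually needs
# patterns (or a dedicated tool) matched to the identifiers it contains.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


note = "Participant 12 (jane.doe@example.org, +1 555 010 9999) reported fatigue."
print(redact(note))
# prints: Participant 12 ([EMAIL], [PHONE]) reported fatigue.
```

Names, addresses, and other free-text identifiers would pass straight through this sketch, so always read the redacted text yourself before sharing it.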

Final Thoughts

AI can be a valuable tool for researchers, but it’s not without its challenges. Many of these challenges stem from AI ethical issues that arise when AI is misused or misunderstood. By understanding these common mistakes and taking steps to address them, you can use AI responsibly and effectively. The key is to see AI as an assistant that complements human effort, not a replacement.