Artificial Intelligence (AI) is changing how we live, work, and learn. However, as AI continues to evolve, it is important to ensure it is developed and used responsibly. In this blog, we’ll explore what responsible AI means, why it is essential, and how tools like ZAIA, Zendy’s AI assistant for researchers, implement these principles in the academic sector.

What are Responsible AI Principles?

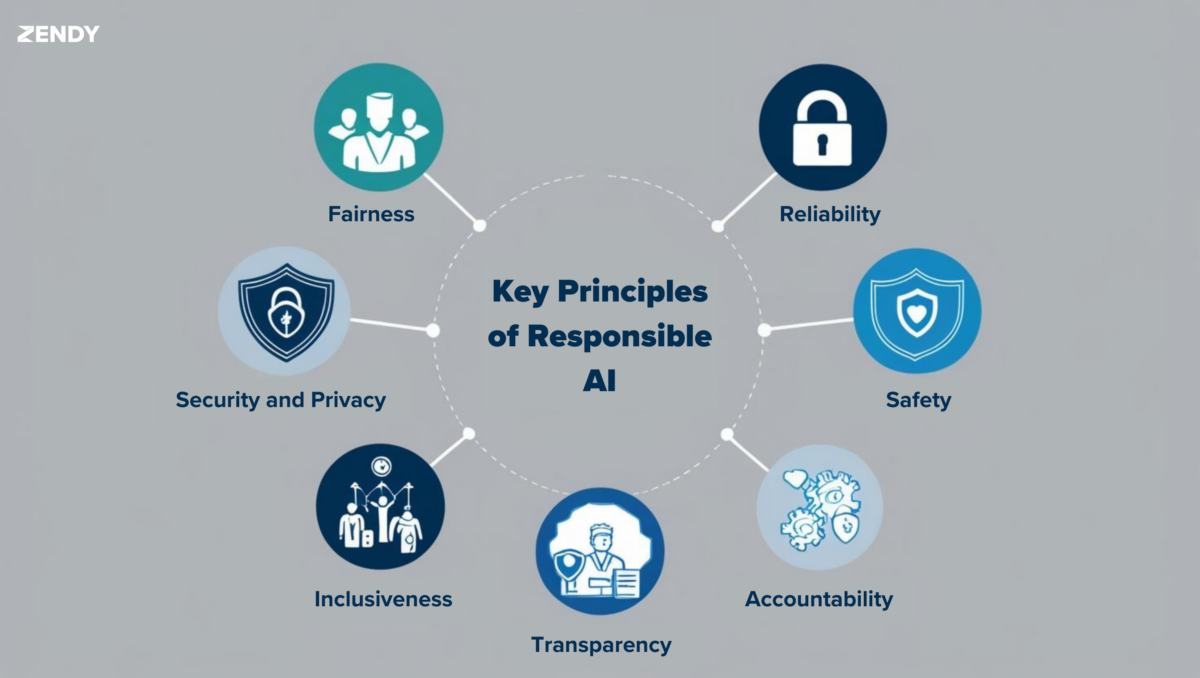

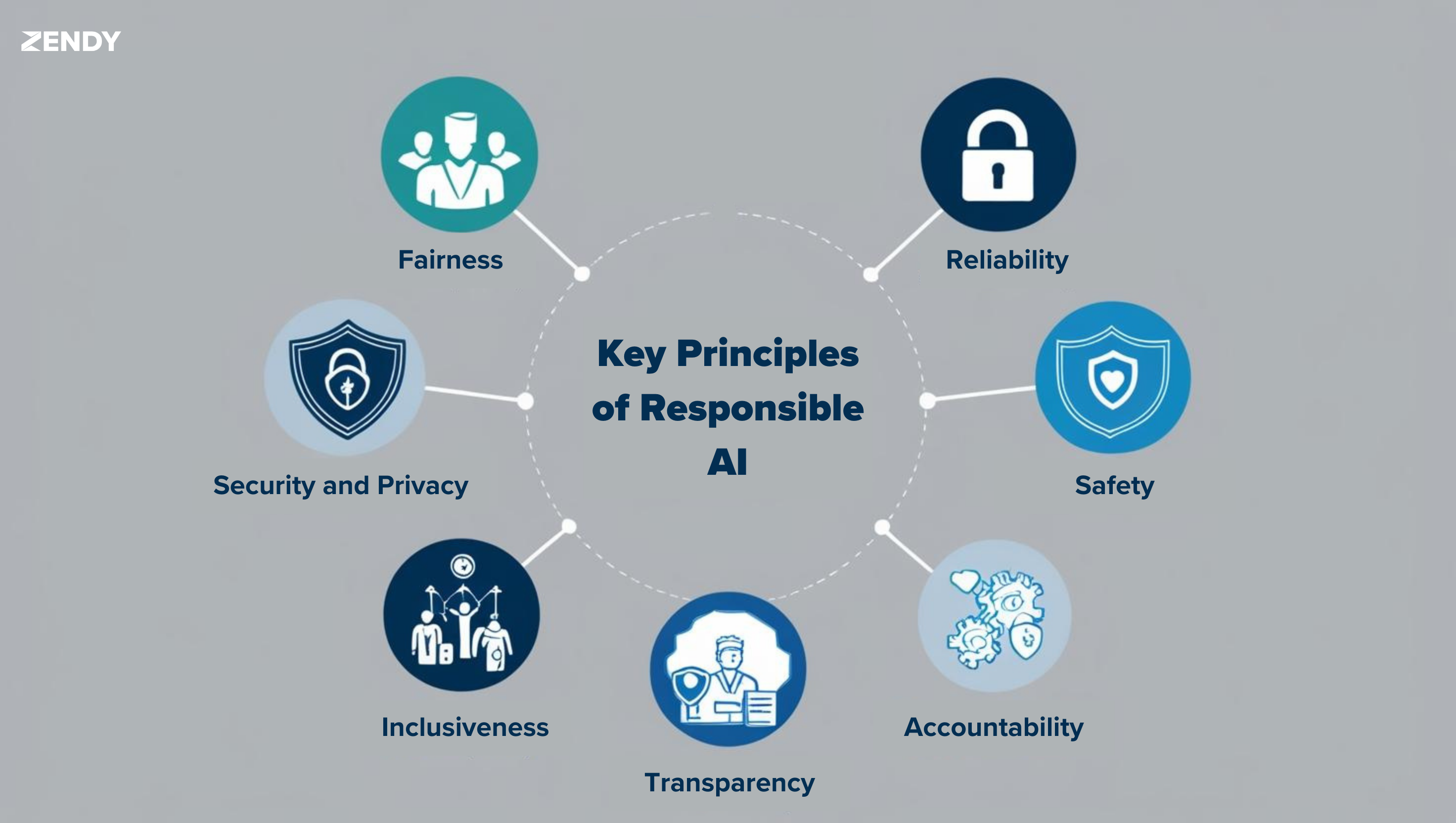

Responsible AI, also known as ethical AI, refers to building and using AI tools in line with a set of key principles:

- Fairness

- Reliability and Safety

- Privacy and Security

- Inclusiveness

- Transparency

- Accountability

AI vs Responsible AI: Why Do Responsible AI Principles Matter?

Keep in mind that AI is not a human being.

This means it cannot comprehend ethical standards or feel a sense of responsibility the way humans do. Embedding these concepts in the development team before work on the tool begins is therefore more important than building the tool itself.

In 2016, Microsoft launched “Tay”, a Twitter chatbot designed to entertain 18- to 24-year-olds in the US and to explore the conversational capabilities of AI. Within just 16 hours, the tool’s responses turned toxic, racist, and offensive after some Twitter users fed it harmful and inappropriate content. The project was shut down immediately, and the development team issued an official apology.

In this case, “Tay” lacked ethical guidelines to help it distinguish harmful content from appropriate content. For this reason, it is crucial to train AI tools on clear principles and ethical frameworks that enable them to produce more responsible outputs.

The development process should also include robust monitoring systems that continuously review and update the training data, ensuring it remains free of harmful content. Put simply, an AI tool is like a child in its developers’ care: the more responsible the custodian is, the better the child’s behaviour will be.
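As a rough illustration of what one small piece of such monitoring could look like, the sketch below screens candidate training examples against a list of blocked terms and sets the flagged ones aside for human review. Everything here is an assumption made for illustration: the `screen_examples` function, the `ReviewResult` type, and the placeholder terms are hypothetical, and this is not how Tay, ZAIA, or any specific product works. A real pipeline would more likely rely on a maintained lexicon or a trained classifier combined with human reviewers.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical placeholder terms, used only for illustration. A real system
# would use a maintained lexicon or a classifier, not a short fixed list.
BLOCKED_TERMS = frozenset({"slur_a", "slur_b"})


@dataclass
class ReviewResult:
    kept: list[str]     # examples that passed the screen
    flagged: list[str]  # examples set aside for human review


def screen_examples(
    examples: list[str],
    blocked_terms: frozenset[str] = BLOCKED_TERMS,
) -> ReviewResult:
    """Split examples into kept and flagged based on blocked terms."""
    kept: list[str] = []
    flagged: list[str] = []
    for text in examples:
        # Lowercase each word and strip surrounding punctuation before matching.
        words = {w.strip(".,!?;:\"'").lower() for w in text.split()}
        if words & blocked_terms:
            flagged.append(text)
        else:
            kept.append(text)
    return ReviewResult(kept=kept, flagged=flagged)


result = screen_examples(["Hello there!", "You are a slur_a."])
print(len(result.kept), "kept;", len(result.flagged), "flagged for review")
```

Word matching like this is easy to evade and can also flag harmless text, which is why the flagged examples go to a reviewer instead of being deleted automatically.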

The Benefits and Challenges of Responsible AI

A responsible AI framework is not a “nice-to-have” feature; it’s a foundational set of principles that every AI-based tool must implement. Here’s why:

- Fairness: By addressing biases, responsible AI helps ensure that outputs are relevant and fair to all groups in society.

- Trust: Transparency in how AI works builds trust among users.

- Accountability: Developers and organisations adhere to high standards, continuously improving AI tools and holding themselves accountable for their outcomes. This ensures that competition centres on what benefits communities rather than simply on what generates more revenue.

Implementing responsible AI principles comes with its share of challenges:

- Biased Data: AI systems often learn from historical data, which may carry biases. This can lead to skewed outcomes, like underrepresenting certain research areas or groups.

- Awareness Gaps: Not all developers and users understand the ethical implications of AI, making education and training critical.

- Time Constraints: AI tools are sometimes developed rapidly, bypassing essential ethical reviews, which increases the risk of errors.
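To make the biased data challenge more concrete, here is a minimal sketch of one simple check: measuring how large a share of a dataset each research area holds and reporting the areas that fall below a chosen threshold. The `underrepresented` function, the 10% default threshold, and the sample labels are all hypothetical choices made for illustration, not an established standard or a description of any particular tool.

```python
from collections import Counter


def underrepresented(labels, min_share=0.10):
    """Return each label whose share of the dataset is below min_share."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {
        label: count / total
        for label, count in counts.items()
        if count / total < min_share
    }


# Hypothetical sample: the research area attached to each training document.
areas = ["medicine"] * 8 + ["engineering"] * 11 + ["linguistics"] * 1
for area, share in underrepresented(areas).items():
    print(f"{area}: {share:.0%} of the dataset")
```

A check like this only covers areas that already appear in the data. An area that is missing entirely will not show up, so the list of expected areas still needs to come from people who know the field.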

Responsible AI Principles and ZAIA

ZAIA, Zendy’s AI-powered assistant for researchers, is built with a responsible AI framework in mind. Our AI incorporates the six principles of responsible AI (fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability) to meet the needs of students, researchers, and professionals in academia. Here’s how ZAIA addresses these challenges:

- Fairness: ZAIA ensures balanced and unbiased recommendations, analysing academic resources from diverse disciplines and publishers.

- Reliability and Safety: ZAIA’s trained model is rigorously tested to provide accurate and dependable insights, minimising errors in output.

- Transparency: ZAIA’s functionality is clear and user-friendly, helping researchers understand and trust its outcomes.

- Accountability: Regular updates improve ZAIA’s features, addressing user feedback and adapting to evolving academic needs.

Conclusion

Responsible AI principles are the foundation for building ethical and fair systems that benefit everyone. ZAIA reflects Zendy’s commitment to these principles, encouraging users to explore research responsibly and effectively. Whether you’re a student, researcher, or professional, ZAIA provides a reliable and ethical tool to enhance your academic journey.

Discover ZAIA today. Together, let’s build a future where AI serves as a trusted partner in education and beyond.